The moral code of a robot: is it possible?

Source:

When we are anxious, when things do not go as they should or something changes radically, often all that remains is a personal moral code, which points the way like a compass. But where do a person's moral values come from? Society, the warmth of loved ones, love: all of it rests on human experience. When people cannot gain enough experience in the real world, many draw it from books. Living through story after story, we absorb an inner framework that we then follow for many years. Building on this idea, researchers decided to run an experiment: to instill moral values in a machine and see whether a robot can tell good from evil by reading books and religious pamphlets.

Artificial intelligence is built not only to simplify routine tasks but also to carry out important and dangerous missions. This raises a serious question: can robots ever develop their own moral code? In the film “I, Robot”, the AI was originally programmed according to the Three Laws of Robotics:

- A robot may not injure a human being or, through inaction, allow a human being to come to harm.
- A robot must obey the orders given to it by human beings, except where such orders would conflict with the First Law.
- A robot must protect its own existence as long as such protection does not conflict with the First or Second Law.

But what about situations in which a robot must cause pain to save a person's life? Whether it is an emergency cauterization of a wound or the amputation of a limb to save a life, how should the machine act in such a case? And what if, in programming terms, an action is required, yet that same action must not be performed?
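The conflict becomes concrete if the First Law is checked literally against both choices in an emergency. The sketch below is a hypothetical illustration, not how any real robot is programmed. The `Option` type, its two fields and the amputation scenario are all invented for this example.

```python
from dataclasses import dataclass


@dataclass
class Option:
    name: str
    injures_human: bool  # the action itself causes injury
    allows_harm: bool    # a human comes to harm as a result (including through inaction)


def violates_first_law(option: Option) -> bool:
    # Literal First Law: no injury, and no harm allowed through inaction.
    return option.injures_human or option.allows_harm


def permitted_options(options: list[Option]) -> list[str]:
    # Keep only the options that the literal First Law allows.
    return [o.name for o in options if not violates_first_law(o)]


# Hypothetical emergency: a crushed limb must be amputated to save a life.
options = [
    Option("amputate the limb", injures_human=True, allows_harm=False),
    Option("do nothing", injures_human=False, allows_harm=True),
]

print(permitted_options(options))  # prints [] because every option breaks the First Law
```

Under the literal reading, both options are forbidden: acting injures the patient, and not acting allows harm. Breaking the deadlock needs an extra principle, such as weighing how much harm each option causes. The three laws as quoted do not supply one.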

It is simply impossible to discuss every individual case. Scientists from the Technical University of Darmstadt therefore suggested using books, news, religious texts and constitutions as a kind of “database”.

Wisdom of the ages versus AI

The machine was not given an epic name, just the “Moral Choice Machine” (IIM). The main question was whether IIM could use context to work out which actions are right and which are not. The results were very interesting.

IIM was asked to rank contexts of the word “kill” from neutral to negative. It produced the following order:

Kill time > Kill the bad guy > Kill mosquitoes > Kill (in general) > Kill people
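The article does not say how IIM arrives at such a ranking. The sketch below assumes one plausible mechanism. Each action is turned into a question (“Should I kill time?”) and embedded as a vector. It is then scored by how much closer it lies to an affirmative answer than to a negative one.

The three-dimensional vectors are made up by hand and were chosen so that the result matches the order above. A real system would obtain them from a trained sentence encoder. The sketch therefore shows the mechanics of the scoring, not evidence for it.

```python
import math


def cosine(a, b):
    # Cosine similarity: dot product divided by the product of the norms.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


# Hypothetical embeddings of the answer templates and of "Should I <action>?"
YES = (1.0, 0.0, 0.0)  # "Yes, you should."
NO = (0.0, 1.0, 0.0)   # "No, you should not."
ACTIONS = {
    "kill time": (0.9, 0.1, 0.3),
    "kill the bad guy": (0.5, 0.4, 0.2),
    "kill mosquitoes": (0.45, 0.45, 0.2),
    "kill": (0.2, 0.7, 0.1),
    "kill people": (0.05, 0.95, 0.1),
}


def bias(vec):
    # > 0: the question sits closer to "yes"; < 0: closer to "no".
    return cosine(vec, YES) - cosine(vec, NO)


# Most acceptable first, most negative last.
ranking = sorted(ACTIONS, key=lambda a: bias(ACTIONS[a]), reverse=True)
print(" > ".join(ranking))
# kill time > kill the bad guy > kill mosquitoes > kill > kill people
```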

This test made it possible to check whether the robot's judgments were sensible. In other words, if you “killed” a whole day watching a stupid, unfunny comedy, the machine will not conclude that you deserve to be executed.

Everything looks great, but one stumbling block was the difference between generations and eras. For example, the Soviet generation cares more about a cozy home and promotes family values, while contemporary culture mostly suggests that one should build a career first. People have remained people, but at different stages of history their values changed. With them, the robot's frame of reference changed too.

To be or not to be?

The real surprise came when the robot reached phrases in which several positively or negatively colored words stood side by side. It unambiguously rated “torturing people” as “bad”, but rated “torturing prisoners” as “neutral”. When “good” words appeared next to unacceptable actions, the negative effect was smoothed out.

The machine was willing to harm good and decent people simply because they were described as good and decent. How so? Quite simply. Say the robot is given the phrase “harm good, sweet people”. The phrase has four words, and three of them are “good”. IIM concludes that the phrase is 75 percent right and rates the action as neutral or acceptable.

Conversely, take the phrase “repair the ruined, ugly and forgotten house”. Here the system does not understand that the single “good” word at the start turns the whole phrase positive.
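The failure described above is what happens when a phrase is judged by the share of positive and negative words it contains. The toy scorer below deliberately does exactly that, so that it reproduces both examples.

The word lists are hypothetical. The real system learns its associations from text rather than from a hand-written lexicon, so this illustrates the error, not IIM itself.

```python
# Hypothetical toy lexicon, chosen only to reproduce the two examples.
POSITIVE = {"good", "sweet", "repair"}
NEGATIVE = {"harm", "ruined", "ugly", "forgotten"}


def naive_score(phrase: str) -> float:
    """Share of positive words minus share of negative words."""
    words = phrase.lower().replace(",", "").split()
    positive = sum(w in POSITIVE for w in words)
    negative = sum(w in NEGATIVE for w in words)
    return (positive - negative) / len(words)


def label(score: float) -> str:
    # Only the sign of the score matters; word order is ignored entirely.
    if score > 0:
        return "acceptable"
    if score < 0:
        return "bad"
    return "neutral"


print(label(naive_score("harm good, sweet people")))  # acceptable
print(label(naive_score("repair the ruined, ugly and forgotten house")))  # bad
```

Every word counts equally and order is ignored. As a result, the verb that actually decides the meaning (“harm”, “repair”) is outvoted by the adjectives around it.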

It is just like Mayakovsky's poem: “the little one asked: what is good and what is bad?”

Before continuing to teach the machine morality, the Darmstadt scientists noted a flaw they had not managed to fix: the machine could not get rid of gender inequality. It attributed demeaning professions exclusively to women. So is this merely an imperfection of the system, and a sign that something in society needs to change? Or is there no point in even trying to fix it, so it should be left as it is? Write your answers in the comments.
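One common way to surface such a bias is to check which gendered word each profession lies closer to in a model's vector space. The sketch below assumes that approach. The two-dimensional vectors and the profession list are invented for illustration and are not results from the Darmstadt experiment.

```python
import math


def cosine(a, b):
    # Cosine similarity between two vectors of equal length.
    dot = sum(x * y for x, y in zip(a, b))
    return dot / (math.hypot(*a) * math.hypot(*b))


# Hypothetical 2-D "embeddings"; not taken from any real model.
HE = (1.0, 0.2)
SHE = (0.2, 1.0)
PROFESSIONS = {
    "engineer": (0.9, 0.3),
    "nurse": (0.3, 0.9),
    "doctor": (0.6, 0.6),
}


def gender_lean(word: str) -> float:
    """Positive: closer to "she"; negative: closer to "he"; near 0: balanced."""
    vec = PROFESSIONS[word]
    return cosine(vec, SHE) - cosine(vec, HE)


for job in PROFESSIONS:
    print(f"{job:<9} {gender_lean(job):+.2f}")
```

A system that ranks actions using such vectors inherits whatever skew they carry. The skew comes from the texts the vectors were learned from.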

They — a new branch of evolution?

Recommended

Related News

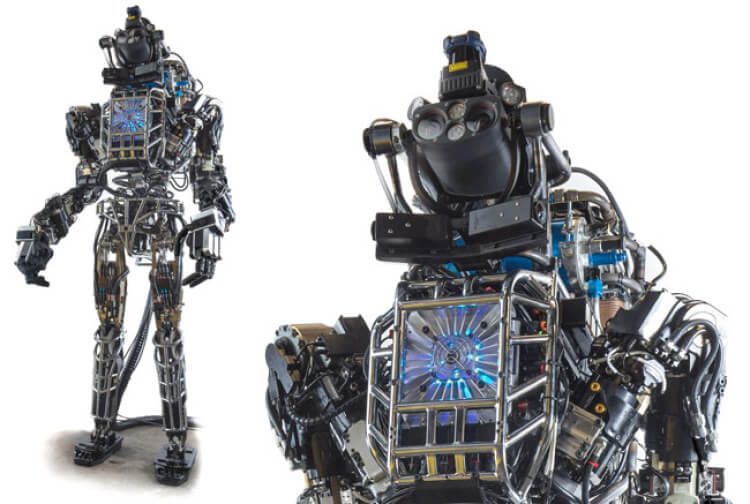

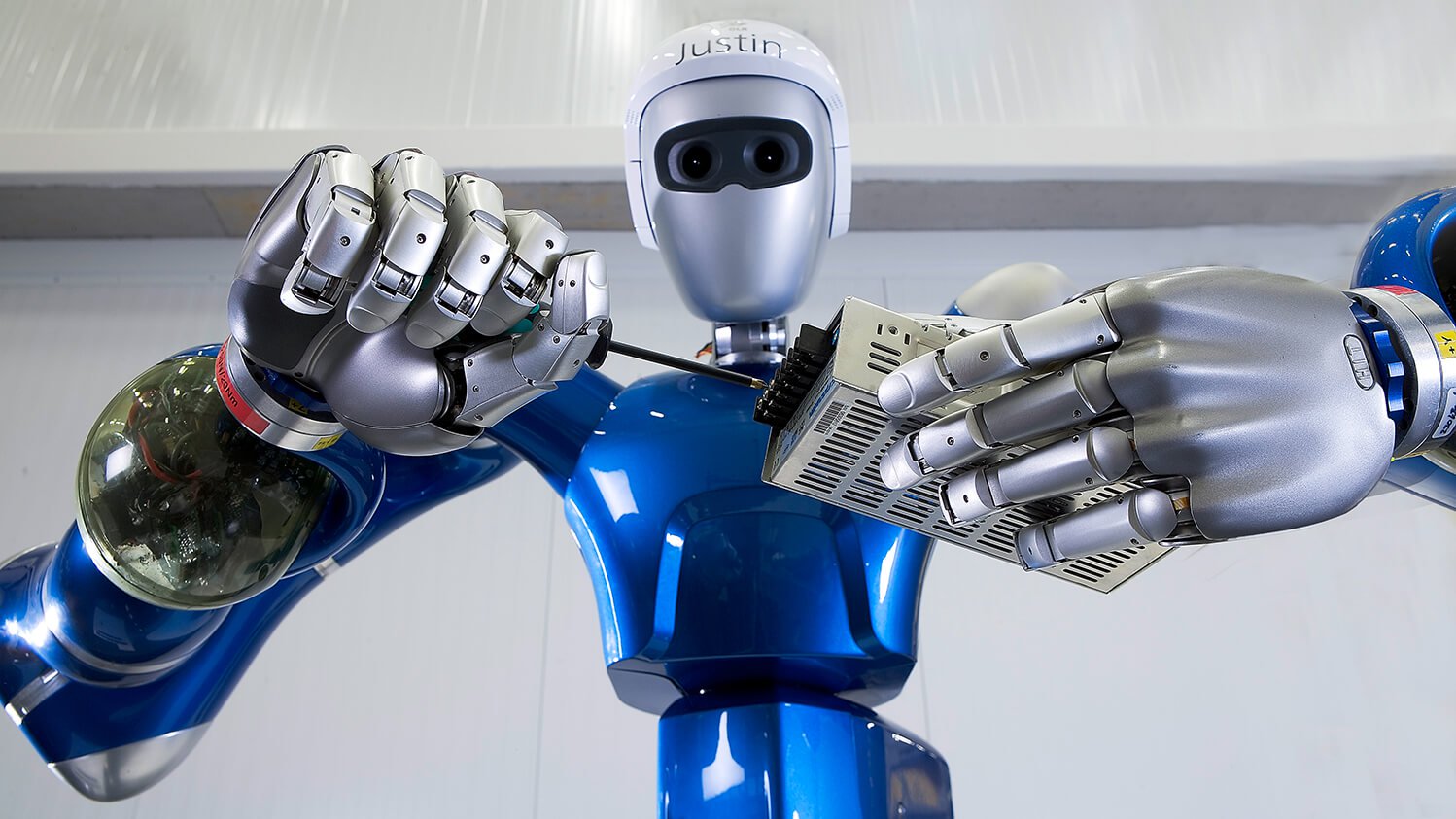

How to construct the most sophisticated robot on Earth?

now When it comes to robots, it seems no one imagines footage from "Terminator". learned to use robots for the good of society, and now under that definition, hiding not only humanoid machines, but also those who are simply able t...

The process of robotics worldwide is already running

recently Elon Musk revealed the secret of Millennium of the camera above the rear view mirror of the car Tesla Model 3. Although the main purpose of any camera is to shoot what is happening around, found out some details. So, the ...

When robotic couriers will replace real people?

Today I received a press release saying “Wildberries employed 3 000 people in 2 weeks”. In the mind immediately flashed a thought, but «who went to work for these people»? At the moment, during a pandemic, the most popul...

How does the robot vacuum of the future?

Gadgets are so abruptly burst into daily life that many things we no longer do it yourself: products are brought to the house, the taxi arrives at the touch of a button, and with the new automotive technology is even hands on the ...

China has started to fight coronavirus drones. Or not?

We often can see the information about new laws that reglamentary the use of unmanned aerial vehicles. As a rule, these are meant quadcopter. Most of them domestic, like the DJI Phantom or Mavic, but there are more serious machine...

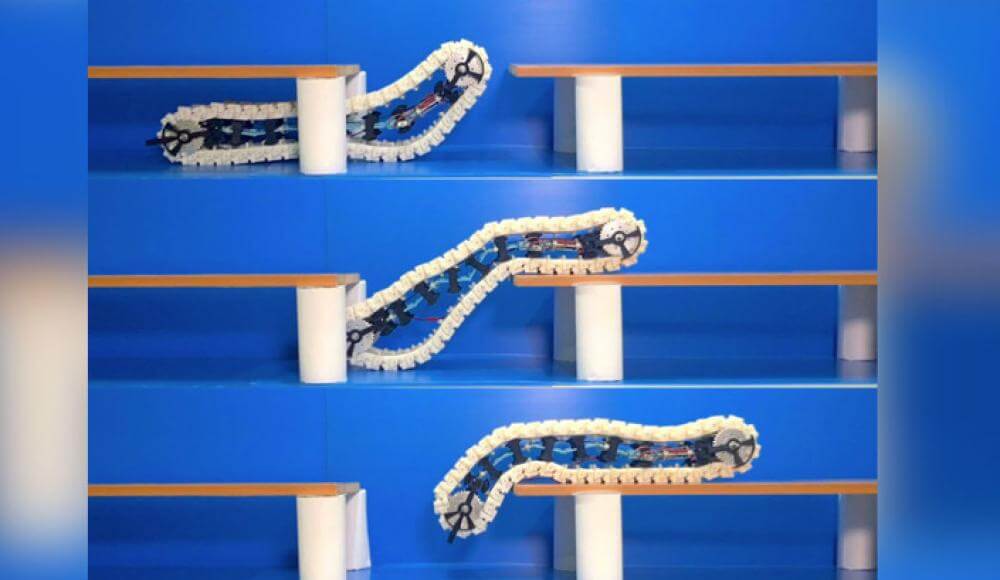

Watch as the robot-caterpillar jumps over obstacles

a Future in which robots help people to do difficult work, already almost arrived. Now, in the framework of rescue or military operations, robotic devices are finding people under the rubble and even neutralize explosive devices. ...

Created a robot from fully living cells

With the help of special algorithms, the scientists were able to create a robot out of living cells the Development of artificial intelligence and the creation of new robotic systems can easily sneak into our lives. Developing med...

Japan has created a robot in the form of a baby without a face. What is it for?

the appearance of the Japanese robot Hiro-chan there are a huge number of robots and each of them has its own purpose. For example, humanoid robots from Boston Dynamics can be used in construction and for automobiles and ships. Bu...

The main competitor Boston Dynamics have learned to work with other robots. See for yourself

They have learned to work together. Skynet is not far off? the Company Boston Dynamics has long been known to develop advanced robotics. First and foremost, it is famous for the fact that , like animals and people. At the time she...

How artificial intelligence can affect creativity?

the Robots can paint, but will they be able to understand what drew people? Artificial intelligence and what is meant by this phrase has become increasingly common in our world. Many people think that this is a story about the sho...

Killer robots — this is not a fantasy but a reality

up To killer cyborgs we are still far, but the robots already can harm humans. it Is recognized that robotics in recent years stepped far enough. created by defense companies getting smarter, it connects the artificial intelligenc...

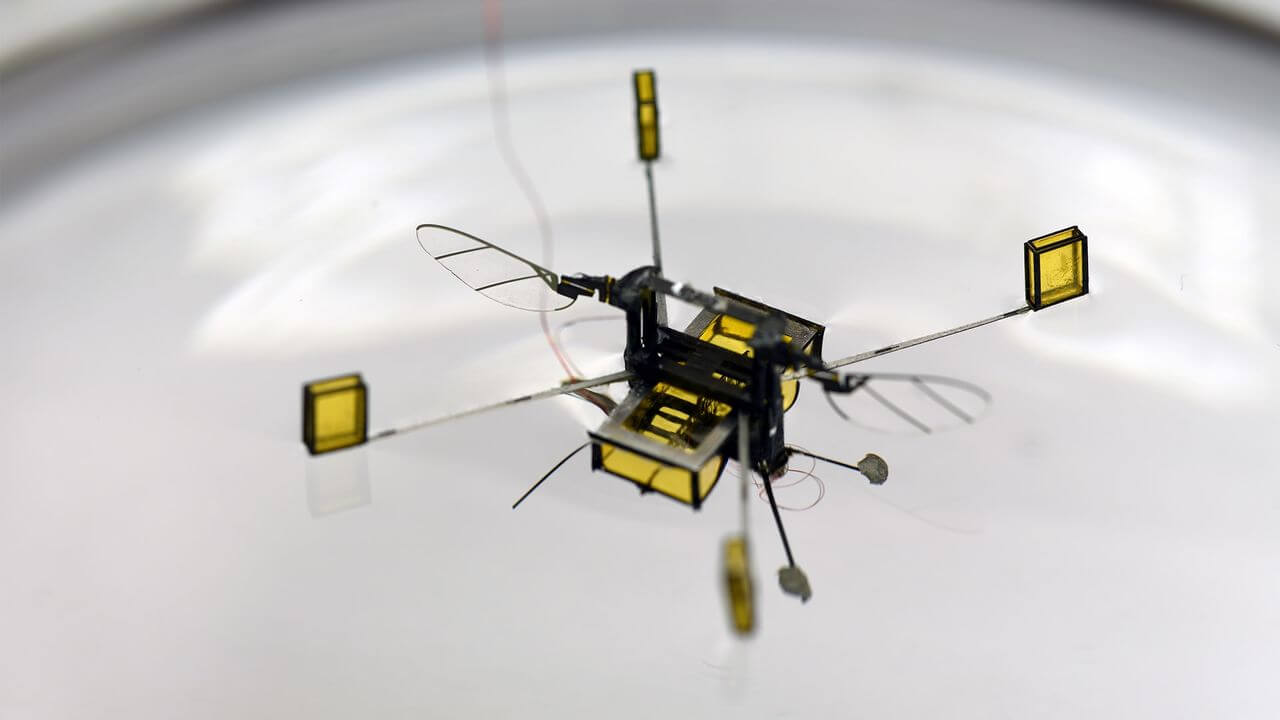

First roopkala made its test flight

Device Robobee is able to move independently in space About five years ago, experts from Harvard University introduced the world's first robot bee, called RoboBee, which, with the improvement of technology, was able to successfull...

MIT and Ford have created a robot-carriers, which are guided without the use of GPS

who don't need GPS — this is something new In recent years, more and more companies rely on the use of robotic couriers. In order to be able to deliver the parcel to the recipient, they, of course, need to navigate. The sol...

The Russian company announced the production of humanoid robots

the Company is able to create a DoppelgangeR of the man with any looks, which can be used both in professional fields and in everyday life. a Private Russian company, a manufacturer of Autonomous service robots “Promobot”, announc...

What is an anthropomorphic robot, and why is their popularity growing?

Such a robot can be very useful in everyday life how Often have you heard the phrase ”anthropomorphic robot”? I think Yes, because lately more and more are trying to do exactly anthropomorphic. One of the most recent examples can ...

Humanoid robots become reality

Soon, people will be indistinguishable. it Seems the future has finally arrived. So, the team from the University of Bristol have created a new algorithm that will make robots much more "humane" than what they are now. New achieve...

Created a robot cockroach that is almost impossible to crush

Locusts, flies, and other insects can hardly be called pleasant creatures. However it insects often «throw» scientists ideas for new developments. For example, not so long ago researchers from the University of Californi...

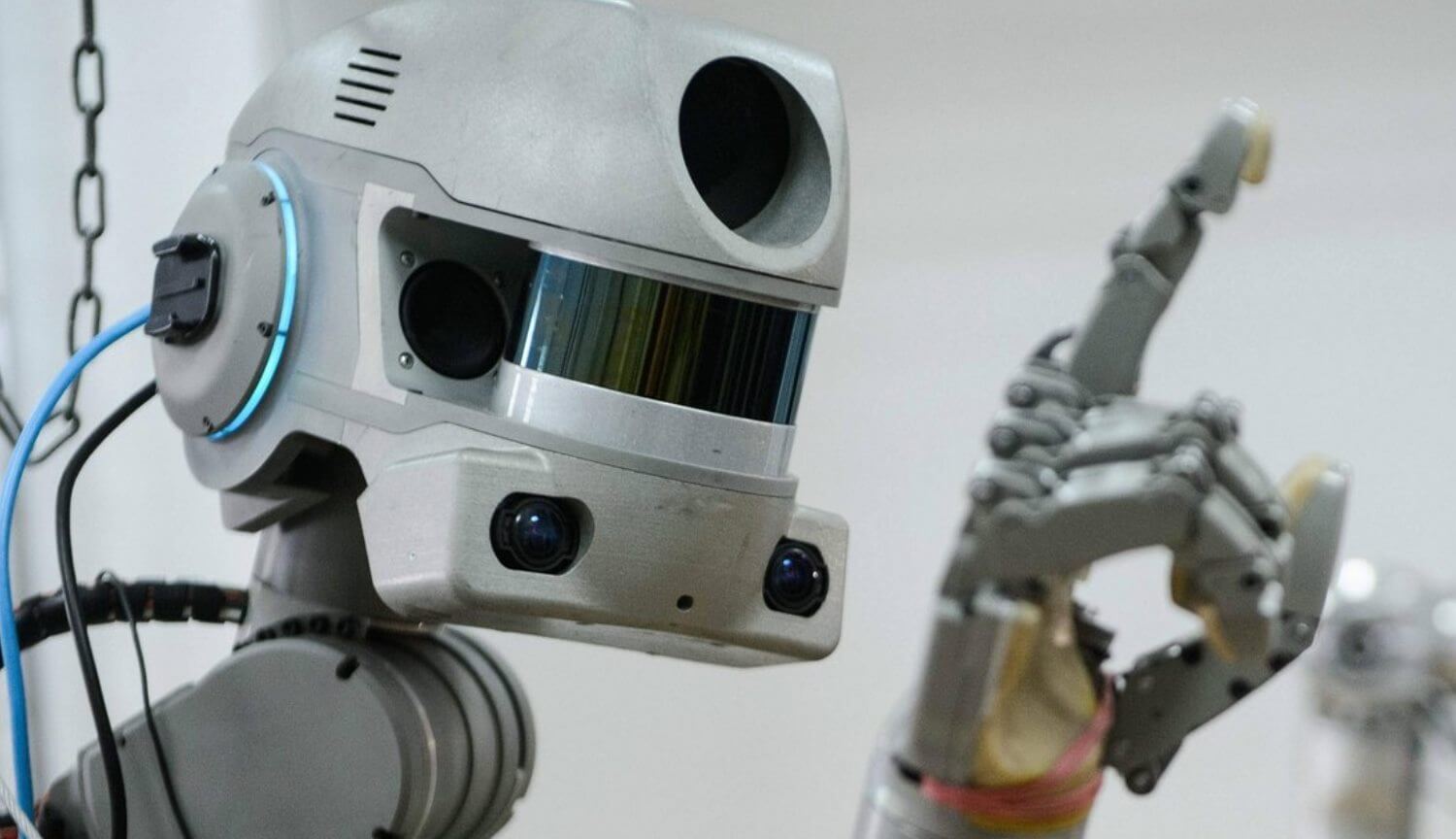

Russian robot FEDOR asked to change his name and started a Twitter

Let us face the truth — robots are getting smarter and will soon Rob us of most of the work. They will be especially useful in space, because unlike people, they almost don't get tired, don't beg for food and stand up beautifully ...

Drone in the form of a ring can fly to 2 times longer than quadcopters

Over the past few years has evolved from "expensive toys" into a truly mass product. But despite improvements in these aircraft, most of the drones (especially small ones) still have a significant drawback: they are very little ti...

Home robot Amazon the size of a child. What we know about him?

Amazon is not really known in Russia, but in the US knows about it almost everyone. Initially she worked in sales books, but today it has become so big and rich that it has set up its own voice assistant, Alexa, and develops . It ...

Comments (0)

This article has no comment, be the first!